Comprehensive Guide to Large Language Models

Explore the fundamentals, architecture, and advanced concepts of Large Language Models. From transformer mechanics to implementation strategies, this guide covers everything you need to understand modern LLM technology.

What is GPT? The Foundation of Generative AI

Understanding the technology that powers modern AI assistants

At the heart of modern Generative AI is GPT (Generative Pre-trained Transformer), a family of language models that can generate human-like text. GPT models are trained on vast amounts of text data and can predict the next word in a sequence, making them ideal for tasks like answering questions, writing essays, and even coding.

How GPT Works

GPT uses a transformer architecture to process and generate text, modeling the relationships between the words in its input. During training, the model learns patterns and context from massive datasets, which lets it generate coherent and contextually relevant text.

Applications

Chatbots

Powering conversational interfaces that can understand and respond to user queries

Content Generation

Creating articles, summaries, and creative writing based on prompts

Code Assistance

Helping developers write, debug, and understand code through tools like GitHub Copilot

Understanding Transformers: The Brain Behind GPT

Exploring the neural network architecture that powers modern language models

Transformers are the backbone of models like GPT. They are neural networks designed to handle sequential data, such as text, and are known for their ability to capture long-range dependencies in data.

Types of Transformers

Encoder-Only Models

- Focus on understanding text (e.g., sentiment analysis, classification)

- Ideal for tasks where the goal is to analyze input text rather than generate new text

- Examples include BERT, RoBERTa, and DistilBERT

Short-Term vs. Long-Term Memory in AI

Understanding how AI models remember and process information

AI models have two types of memory that affect how they process and retain information. Understanding these memory types is crucial for building effective AI assistants.

Short-Term Memory

- Refers to the model's ability to remember context within a single conversation or input

- Example: A chatbot remembers the current conversation but forgets it once the session ends

- Limited by the context window of the model (e.g., 8K tokens for some models)

- Useful for maintaining coherence in ongoing conversations

Long-Term Memory

- Refers to the model's ability to retain information across multiple sessions or tasks

- Example: A model fine-tuned on a company's knowledge base can "remember" that information for future use

- Implemented through techniques like fine-tuning, retrieval-augmented generation (RAG), or vector databases

- Our knowledge assistant needs long-term memory to access the company's knowledge base effectively
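The context-window limit on short-term memory can be illustrated with a small sketch. It assumes a crude word-count tokenizer and a hypothetical `trim_history` helper; real systems count tokens with the model's own tokenizer and often summarize older turns instead of dropping them.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def trim_history(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits in max_tokens."""
    kept: list[str] = []
    used = 0
    # Walk backwards so the newest messages are considered first.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "user: where is the travel policy",
    "assistant: it is in the HR handbook",
    "user: what is the daily meal limit",
]
# Assumed budget of 14 "tokens": only the two newest messages fit.
window = trim_history(history, max_tokens=14)
```

Anything that falls outside the window is simply invisible to the model, which is why information that must survive across sessions is kept in an external store instead.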

RAG Architecture: Combining Knowledge and Creativity

From basic retrieval to advanced agent-based systems

Retrieval-Augmented Generation (RAG) is a powerful architecture that combines retrieval-based and generative approaches to build accurate and reliable AI assistants. Let's explore the evolution from Native RAG to Agentic RAG.

Native RAG: The Foundation

Native RAG, a widely used baseline for AI-powered information retrieval, employs a straightforward pipeline: retrieve relevant passages, then generate an answer from them. This linear process combines retrieval and generation methods to deliver contextually relevant answers, setting the stage for more advanced implementations.

How Native RAG Works

1. Retrieval

The system searches a knowledge base (e.g., company documents) for relevant information

2. Generation

The retrieved information is fed into a generative model (e.g., GPT) to produce a coherent answer
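The two steps above can be sketched in a few lines. This is a minimal illustration, assuming word overlap as the relevance score and a made-up three-document knowledge base; production systems typically rank with embeddings in a vector database, and the resulting prompt would be sent to a generative model rather than just built.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Step 1: rank documents by how many query words they share."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Step 2 (input side): place the retrieved text in front of the question."""
    joined = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


# Hypothetical knowledge base used only for illustration.
knowledge_base = [
    "Vacation requests must be submitted two weeks in advance.",
    "The VPN client is installed from the internal software portal.",
    "Expense reports are due by the fifth day of each month.",
]
question = "How do I install the VPN client?"
passages = retrieve(question, knowledge_base)
prompt = build_prompt(question, passages)
```

Because the knowledge base is just data passed to `retrieve`, adding a document changes future answers without touching the model.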

Benefits of Native RAG

- It allows the assistant to pull accurate information from the knowledge base and generate human-like responses

- Reduces hallucinations by grounding responses in factual information

- Combines the best of both worlds: the accuracy of retrieval-based systems and the fluency of generative models

- Can be updated with new information without retraining the entire model

Agentic RAG: The Game Changer

Agentic RAG is an agent-based approach to question answering that coordinates work across multiple documents. It introduces a network of AI agents and decision points, creating a more dynamic and adaptable system.

This approach adds intelligent routing, adaptive processing, and continuous improvement mechanisms, which let the system handle complex queries and refine its responses.

Key Components and Architecture

Each document is assigned a dedicated agent capable of answering questions and summarizing within its own document.

A top-level agent manages all the document agents, orchestrating their interactions and integrating their outputs to generate a coherent and comprehensive response.

The Agentic Advantage

The system can determine whether to use internal knowledge, seek external information, or leverage language models based on the query type.

Incorporates relevance checks and query rewriting capabilities to refine and improve the information retrieval process dynamically.
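A toy version of this arrangement is sketched below: one agent per document, a top-level agent that routes queries, a relevance check, and a query-rewrite fallback. The class names, the word-overlap relevance score, and the synonym table are all assumptions made for illustration; a real system would use an LLM for each of these decisions.

```python
import re
from dataclasses import dataclass


def words(text: str) -> set[str]:
    """Lowercase word set used for the toy relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class DocumentAgent:
    """Answers questions about exactly one document."""
    name: str
    text: str

    def relevance(self, query: str) -> int:
        # Relevance check: number of query words found in the document.
        return len(words(query) & words(self.text))

    def answer(self, query: str) -> str:
        return f"[{self.name}] {self.text}"


class TopLevelAgent:
    """Routes a query to document agents and collects their answers."""

    def __init__(self, agents: list[DocumentAgent], synonyms: dict[str, str]):
        self.agents = agents
        self.synonyms = synonyms  # hypothetical rewrite table

    def rewrite(self, query: str) -> str:
        # Query rewriting: swap known synonyms for terms the documents use.
        return " ".join(self.synonyms.get(w, w) for w in query.lower().split())

    def ask(self, query: str, min_relevance: int = 2) -> list[str]:
        # Try the original query first, then the rewritten one.
        for attempt in (query, self.rewrite(query)):
            relevant = [a for a in self.agents if a.relevance(attempt) >= min_relevance]
            if relevant:
                return [a.answer(attempt) for a in relevant]
        return []  # nothing relevant; a real system might fall back to web search


agents = [
    DocumentAgent("hr", "Annual leave requests need manager approval."),
    DocumentAgent("it", "Laptop replacement requests go through the service desk."),
]
router = TopLevelAgent(agents, synonyms={"vacation": "annual leave"})
answers = router.ask("vacation approval")
```

Here the first attempt fails the relevance check, the rewrite turns "vacation" into "annual leave", and the second attempt is routed to the HR agent only.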

Features and Benefits

By incorporating multiple checkpoints and decision points, Agentic RAG significantly improves the relevance and accuracy of responses.

Seamlessly combines internal data, web searches, and language model capabilities to provide comprehensive answers.

The query rewrite mechanism allows the system to learn and adapt, improving its performance over time with each interaction.

Applications

Agentic RAG is particularly useful in scenarios requiring thorough and nuanced information processing and decision-making, such as:

- Complex research tasks requiring synthesis across multiple documents

- Legal document analysis and contract review

- Medical literature review for clinical decision support

- Financial analysis and investment research

- Technical troubleshooting across multiple knowledge sources

Agentic AI: Autonomous and Goal-Oriented Systems

Building AI systems that can act independently to achieve specific goals

To make the assistant more advanced, we introduce Agentic AI, which refers to AI systems that can act autonomously to achieve specific goals. These systems can plan and execute tasks, adapt to new information, and interact with other systems or humans.

Break down complex tasks into manageable steps and execute them in sequence

Update strategies and approaches based on new data or changing circumstances

Communicate with other systems, APIs, and humans to accomplish tasks

Examples of Agentic AI Capabilities

- Automatically update the knowledge base with new information

- Proactively suggest relevant documents to employees based on their work

- Escalate complex queries to human experts when necessary

- Perform multi-step tasks like data analysis or report generation

- Learn from user interactions to improve future responses

Model Context Protocol (MCP): Standardizing AI Integration

An open protocol that standardizes how applications provide context to LLMs

The Model Context Protocol (MCP) is an open protocol that standardizes how applications provide context to LLMs. Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect devices to peripherals, MCP provides a standardized way to connect AI models to different data sources and tools.

Why MCP?

A growing list of pre-built integrations that your LLM can directly plug into

Example: Connect your LLM to a database, API, or knowledge base with just a few lines of code

The ability to switch between LLM providers and vendors without rewriting your application

Example: If you start with GPT-4 but later want to try Claude, MCP makes the transition seamless

Guidelines for securing your data within your infrastructure, ensuring compliance with privacy and security standards

Example: Encrypt sensitive data before sending it to the LLM

General Architecture

At its core, MCP follows a client-server architecture in which a host application (the client) can connect to multiple servers. The architecture has two roles:

- Clients: Applications or agents that interact with the LLM

- Servers: Services that provide context, tools, or data to the LLM

For example:

- A knowledge assistant (client) connects to a document server (for retrieval) and a tool server (for executing actions)

- The LLM acts as the brain, while the servers provide the necessary context and tools

The MCP Server: A Foundational Component

Understanding the server-side implementation of the Model Context Protocol

The MCP Server is a foundational component in the MCP architecture. It implements the server-side of the protocol and is responsible for providing tools, resources, and capabilities to clients.

1. Exposing Tools

Clients can discover and execute tools provided by the server

Example: A tool for retrieving documents from a knowledge base

2. Managing Resources

Resources are accessed using URI-based patterns, making it easy to organize and retrieve data

Example: Accessing a specific document using a URI like mcp://knowledge-base/document123

3. Providing Prompt Templates

The server can store and serve prompt templates, which clients can use to generate consistent and high-quality inputs for the LLM

Example: A template for summarizing a document

4. Handling Prompt Requests

The server processes prompt requests from clients and returns the generated responses

Example: A client sends a prompt to summarize a document, and the server returns the summary

5. Supporting Capability Negotiation

The server and client negotiate capabilities to ensure compatibility and optimal performance

Example: The server informs the client about the tools and resources it supports

6. Implementing Server-Side Protocol Operations

The server handles protocol-specific operations, such as authentication, logging, and notifications

Example: Logging all interactions for auditing and debugging

7. Managing Concurrent Client Connections

The server supports multiple clients simultaneously, ensuring scalability and reliability

Example: Handling requests from hundreds of employees using the knowledge assistant

8. Providing Structured Logging and Notifications

The server logs all interactions and sends notifications for important events

Example: Notifying administrators when a tool fails or a resource is accessed
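Several of these responsibilities (exposing tools, URI-addressed resources, logging) can be illustrated with an in-memory toy. The sketch below does not use any MCP SDK: `ToyToolServer` and its methods are hypothetical names, and a real server would speak the protocol over a transport instead of being called directly.

```python
from typing import Callable


class ToyToolServer:
    """In-memory stand-in for a server that exposes tools and resources."""

    def __init__(self) -> None:
        self._tools: dict[str, Callable[..., str]] = {}
        self.resources: dict[str, str] = {}
        self.log: list[str] = []

    def tool(self, func: Callable[..., str]) -> Callable[..., str]:
        """Decorator that registers a function as a discoverable tool."""
        self._tools[func.__name__] = func
        return func

    def list_tools(self) -> list[str]:
        """Tool discovery: names of every registered tool."""
        return sorted(self._tools)

    def call_tool(self, name: str, **arguments: str) -> str:
        self.log.append(f"call_tool {name}")  # every interaction is logged
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name](**arguments)

    def read_resource(self, uri: str) -> str:
        self.log.append(f"read_resource {uri}")
        return self.resources[uri]


server = ToyToolServer()
server.resources["mcp://knowledge-base/document123"] = "Onboarding checklist"


@server.tool
def search_documents(keyword: str) -> str:
    """Return the URIs of resources whose content mentions the keyword."""
    hits = [
        uri for uri, content in server.resources.items()
        if keyword.lower() in content.lower()
    ]
    return ", ".join(hits)


result = server.call_tool("search_documents", keyword="onboarding")
```

The `log` list stands in for structured logging; notifications and concurrent connections are left out to keep the sketch short.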

The MCP Client: Interacting with MCP Servers

Understanding the client-side implementation of the Model Context Protocol

The MCP Client is a key component in the Model Context Protocol (MCP) architecture, responsible for establishing and managing connections with MCP servers. It implements the client-side of the protocol, handling various aspects of communication and interaction.

1. Protocol Version Negotiation

Ensures compatibility between the client and server by negotiating the protocol version

Example: The client and server agree to use the latest version of MCP that both of them support

2. Capability Negotiation

Determines the features and tools supported by the server

Example: The client discovers that the server supports document retrieval and summarization

3. Message Transport and JSON-RPC Communication

Facilitates communication between the client and server using JSON-RPC, a lightweight remote procedure call protocol

Example: The client sends a request to the server to retrieve a document, and the server responds with the document content

4. Tool Discovery and Execution

Discovers tools exposed by the server and executes them as needed

Example: The client discovers a tool for searching the knowledge base and uses it to find relevant documents

5. Resource Access and Management

Accesses and manages resources provided by the server using URI-based patterns

Example: The client retrieves a document using its URI (mcp://knowledge-base/document123)

6. Prompt System Interactions

Interacts with the server's prompt system to generate high-quality inputs for the LLM

Example: The client sends a prompt to the server to summarize a document, and the server returns the summary

7. Optional Features

Supports optional features such as roots (the client tells the server which URIs or folders it may operate in) and sampling (the server asks the client to run an LLM completion on its behalf)

Example: The client exposes a department's document folder as a root and fulfils a server's sampling request to draft a summary
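The version negotiation and JSON-RPC exchange described above might look roughly like the following. This is a sketch built only on the standard `json` module: the `tools/call` method name and the date-style version strings reflect my reading of the MCP specification and should be checked against it, the negotiation rule is a simplification, and the tool name and reply payload are invented for illustration.

```python
import json
from itertools import count

_request_ids = count(1)


def make_request(method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request with a fresh numeric id."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": next(_request_ids), "method": method, "params": params}
    )


def negotiate_version(client_versions: list[str], server_versions: list[str]) -> str:
    """Pick the newest version both sides support (ISO-style dates sort as text)."""
    shared = set(client_versions) & set(server_versions)
    if not shared:
        raise ValueError("no protocol version in common")
    return max(shared)


def parse_response(raw: str) -> dict:
    """Return the result of a JSON-RPC response, or raise on an error object."""
    message = json.loads(raw)
    if "error" in message:
        raise RuntimeError(message["error"]["message"])
    return message["result"]


version = negotiate_version(["2024-11-05", "2025-03-26"], ["2024-11-05"])
request = make_request(
    "tools/call",
    {"name": "search_documents", "arguments": {"keyword": "onboarding"}},
)
# Hand-written reply standing in for what a server might send back.
reply = '{"jsonrpc": "2.0", "id": 1, "result": {"content": "document123"}}'
result = parse_response(reply)
```

Keeping request construction and response parsing in separate functions mirrors the split between message transport and the higher-level tool and resource operations.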

Spring-AI MCP Server Integration

Extending the MCP Java SDK for Spring Boot applications

The Spring-AI MCP Server integration extends the MCP Java SDK to provide auto-configuration for MCP server functionality in Spring Boot applications. This integration makes it easy to build and deploy MCP servers in a Java-based environment.

Key Features of Spring-AI MCP Server

Simplifies the setup and configuration of MCP servers in Spring Boot applications

Example: Automatically configuring the server to expose tools and resources

Supports both synchronous and asynchronous APIs, allowing for flexible integration in different application contexts

Example: Using asynchronous APIs for handling long-running tasks like document summarization

Built on the robust Spring Boot framework, ensuring high performance and reliability

Example: Handling thousands of concurrent client connections

LLMs: Large Language Models

The foundation of modern AI systems that power intelligent applications

Large Language Models (LLMs) are the backbone of modern AI systems, enabling applications like chatbots, content generation, and code assistance. Let's explore the architecture and key components that make these models work.

Architecture of LLMs

Core Components and Processing Flow

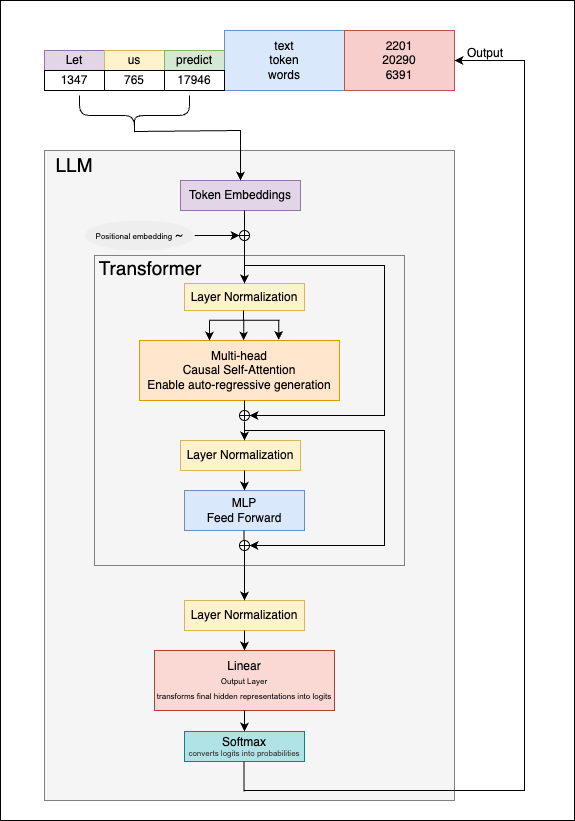

As shown in the diagram, an LLM processes text through several key stages:

Input Tokenization

The input text (e.g., "Let us predict") is converted into numerical token IDs (1347, 765, 17946) that the model can process. Each token represents a word or subword in the model's vocabulary.
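As a rough illustration of tokenization, the sketch below builds a word-level vocabulary and maps text to IDs and back. It is a simplification: production tokenizers split text into subwords (for example with byte-pair encoding), so the IDs here are small made-up integers rather than the ones quoted above.

```python
def build_vocab(corpus: list[str]) -> dict[str, int]:
    """Assign an integer id to every distinct word, reserving 0 for unknowns."""
    vocab = {"<unk>": 0}
    for sentence in corpus:
        for word in sentence.split():
            vocab.setdefault(word, len(vocab))
    return vocab


def encode(text: str, vocab: dict[str, int]) -> list[int]:
    """Map each word to its id; unseen words map to the <unk> id."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.split()]


def decode(ids: list[int], vocab: dict[str, int]) -> str:
    """Invert the vocabulary to turn ids back into text."""
    id_to_word = {i: w for w, i in vocab.items()}
    return " ".join(id_to_word[i] for i in ids)


vocab = build_vocab(["Let us predict", "Let us generate text"])
ids = encode("Let us predict", vocab)
```

Words outside the vocabulary collapse to a single unknown ID in this toy, which is exactly the problem subword tokenizers are designed to avoid.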

Token Embeddings

These token IDs are transformed into dense vector representations (embeddings) that capture semantic meaning. This is the first step inside the LLM processing pipeline.

Positional Embedding

Information about the position of each token is added (marked with "~" in the diagram) and combined with the token embeddings. This helps the model understand the sequence order since transformers process all tokens simultaneously.
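The combination of token and positional embeddings can be sketched as an elementwise sum of two table lookups. The tables below are filled with random numbers as a stand-in for learned parameters, and the sizes are arbitrary assumptions chosen to keep the example readable.

```python
import random

random.seed(0)  # fixed seed so the tables are reproducible

VOCAB_SIZE, MAX_POSITIONS, DIM = 6, 4, 3


def random_table(rows: int, cols: int) -> list[list[float]]:
    """Stand-in for a learned embedding table."""
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


token_table = random_table(VOCAB_SIZE, DIM)
position_table = random_table(MAX_POSITIONS, DIM)


def embed(token_ids: list[int]) -> list[list[float]]:
    """Look up each token vector and add the vector for its position."""
    return [
        [t + p for t, p in zip(token_table[token_id], position_table[position])]
        for position, token_id in enumerate(token_ids)
    ]


# Token 1 appears at positions 0 and 2 and receives two different vectors.
vectors = embed([1, 2, 1])
```

The repeated token ends up with different input vectors, which is how the model can tell the two occurrences apart even though it sees all positions at once.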

Transformer Block

The core of the LLM contains transformer blocks with several components:

- Layer Normalization: Stabilizes inputs at various stages

- Multi-head Causal Self-Attention: Allows tokens to attend to previous tokens in the sequence, enabling auto-regressive generation

- MLP Feed Forward: Processes each position independently with a multi-layer perceptron

- Residual Connections: The "+" circles in the diagram represent residual connections that help with gradient flow during training
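The causal masking and residual connection can be made concrete with a stripped-down, single-head sketch in plain Python. It deliberately omits the learned query, key, and value projections, layer normalization, and the MLP, so it shows only the masking pattern and the residual addition, not a full transformer block.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention_weights(x: list[list[float]]) -> list[list[float]]:
    """Attention weights where position i may only look at positions 0..i."""
    dim = len(x[0])
    weights = []
    for i, query in enumerate(x):
        # Scaled dot products against the current and earlier positions only.
        scores = [
            sum(q * k for q, k in zip(query, x[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        # Future positions receive a weight of exactly zero.
        weights.append(softmax(scores) + [0.0] * (len(x) - i - 1))
    return weights


def attend(x: list[list[float]]) -> list[list[float]]:
    """Weighted sum of the input vectors, plus the residual connection."""
    out = []
    for i, row in enumerate(causal_attention_weights(x)):
        mixed = [sum(w * x[j][d] for j, w in enumerate(row)) for d in range(len(x[0]))]
        out.append([a + b for a, b in zip(x[i], mixed)])  # residual "+"
    return out


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights = causal_attention_weights(x)
outputs = attend(x)
```

The weight matrix is lower triangular: the first position can attend only to itself, which is what makes auto-regressive generation possible.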

Output Processing

After the transformer processing:

- Final Layer Normalization: Normalizes the output of the transformer block

- Linear Output Layer: Transforms the final hidden representations into logits (raw prediction scores) for each token in the vocabulary

- Softmax: Converts these logits into probabilities, allowing the model to predict the next token

Output Generation

The model produces output with statistics as shown in the diagram: text tokens (2201), token IDs (20290), and word counts (6391). This output becomes part of the context for generating the next token in an auto-regressive manner.

Text Generation Mechanism

- The model processes the input tokens ("Let us predict" in the example)

- It predicts the next most likely token based on the probability distribution from the softmax

- The predicted token is appended to the input sequence

- This new, longer sequence becomes the input for the next prediction

- The process repeats, with the model generating text one token at a time

- The causal self-attention mechanism ensures each prediction only considers previous tokens, not future ones
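The loop described above can be sketched with a toy "model". The logits table below is hypothetical and depends only on the last token, whereas a real LLM computes its logits from the whole sequence; the decoding shown is greedy (always the most probable token), which is one of several possible strategies.

```python
import math

VOCAB = ["Let", "us", "predict", "the", "next", "token"]

# Hypothetical logits: row i scores every candidate that could follow VOCAB[i].
NEXT_TOKEN_LOGITS = [
    [0.0, 4.0, 0.5, 0.1, 0.1, 0.1],  # after "Let"
    [0.0, 0.0, 4.0, 0.5, 0.1, 0.1],  # after "us"
    [0.0, 0.0, 0.0, 4.0, 0.5, 0.1],  # after "predict"
    [0.0, 0.0, 0.0, 0.0, 4.0, 0.5],  # after "the"
    [0.0, 0.0, 0.0, 0.0, 0.0, 4.0],  # after "next"
    [4.0, 0.0, 0.0, 0.0, 0.0, 0.0],  # after "token"
]


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def generate(prompt_ids: list[int], steps: int) -> list[int]:
    """Append one greedily chosen token per step to the running sequence."""
    ids = list(prompt_ids)
    for _ in range(steps):
        # A real model conditions on all of ids; this toy reads only the last one.
        probabilities = softmax(NEXT_TOKEN_LOGITS[ids[-1]])
        next_id = max(range(len(VOCAB)), key=probabilities.__getitem__)
        ids.append(next_id)  # the prediction becomes part of the next input
    return ids


output_ids = generate([0, 1, 2], steps=3)
text = " ".join(VOCAB[i] for i in output_ids)
```

Sampling from `probabilities` instead of taking the maximum would give more varied output at the cost of determinism.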

LLM Types and Categories

Understanding the different types of Large Language Models and their specialized capabilities

Large Language Models come in various types, each designed for specific use cases and with different capabilities. Understanding these categories helps in selecting the right model for your AI assistant.

Text-Based LLMs (Traditional)

These models focus primarily on text generation and understanding, forming the foundation of most AI assistants.

| Type | Description | Examples | Open/Proprietary |
|---|---|---|---|
| Base LLMs | General text generation models without specific optimization | GPT-3, Llama 2, Falcon | Both |
| Chat/Instruction-tuned | Optimized for conversation and following instructions | Claude 3.5 Sonnet, GPT-4o, Llama 3 | Both |
| Reasoning-focused | Enhanced for logical thinking and step-by-step problem solving | Claude 3.7 Sonnet (reasoning mode), Gemini 1.5 Pro | Mostly Proprietary |

Reasoning Models: Adding Logic and Decision-Making

Enhancing AI capabilities with logical reasoning and problem-solving

To enhance the assistant's capabilities, we integrate reasoning models, which enable the AI to perform logical reasoning, problem-solving, and decision-making. These models add a layer of analytical thinking to the assistant's responses.

Analyze complex queries and break them down into smaller, manageable parts

Infer relationships between different pieces of information to provide comprehensive answers

Provide detailed, logical explanations for answers, making complex topics easier to understand

Example Scenario

If an employee asks, "What are the steps to set up a new project?", the assistant with reasoning capabilities can:

- Identify the type of project (software, marketing, etc.) based on context

- Retrieve relevant documentation from the knowledge base

- Analyze the documentation to extract the necessary steps

- Organize the steps in a logical sequence

- Present a detailed, step-by-step guide tailored to the employee's needs

- Anticipate potential questions and provide additional information

AI SDKs and Frameworks: Building the Assistant

Exploring the tools and libraries used to bring the AI assistant to life

To bring the assistant to life, we use a variety of AI SDKs and frameworks, each with its own domain focus. These tools provide the building blocks for creating a powerful and flexible AI assistant.

Focus: Text generation and embeddings

Use Case: Powering the generative capabilities of the assistant

Focus: Pre-trained transformer models for NLP tasks

Use Case: Fine-tuning models for specific tasks

Focus: Building applications with LLMs, including chatbots and agents

Use Case: Orchestrating the assistant's workflows

Focus: Data ingestion, indexing, and retrieval for LLM applications, particularly RAG

Use Case: Document search, knowledge management systems, and chatbots that require access to specific knowledge bases

Focus: Visualizing and debugging LangChain workflows

Use Case: Monitoring and optimizing the assistant's performance

Focus: Building conversational AI systems with Anthropic's Claude model

Use Case: Enhancing the assistant's conversational abilities

Focus: Building AI-powered web applications

Use Case: Creating a user-friendly interface for the assistant

Focus: Multi-agent systems for complex workflows

Use Case: Coordinating multiple AI agents to handle different tasks

Focus: Data validation and settings management

Use Case: Ensuring the assistant's inputs and outputs are structured and valid

Focus: End-to-end machine learning platform

Use Case: Training, deploying, and managing the assistant's models

Data Labeling and Cleaning: The Foundation of AI

Ensuring high-quality data for training and operating AI systems

The quality of the assistant depends on the quality of the data. Without clean, well-labeled data, even the most sophisticated AI models will struggle to provide accurate and helpful responses.

Adding meaningful tags or annotations to data to help the AI understand its structure and meaning.

- Labeling questions and answers in a FAQ dataset

- Categorizing documents by department or topic

- Marking entities like names, dates, and locations in text

- Annotating sentiment (positive, negative, neutral) in customer feedback

Preparing data by removing errors, inconsistencies, and irrelevant information.

- Removing duplicates to prevent bias in training

- Correcting spelling and grammatical errors

- Standardizing formats (dates, phone numbers, etc.)

- Handling missing values appropriately

- Removing personally identifiable information (PII) for privacy
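A few of these cleaning steps can be sketched with the standard library alone. The record layout (`text` and `date` keys), the list of accepted date layouts, and the email-only redaction rule are assumptions for illustration; real PII removal needs far broader rules and usually dedicated tooling.

```python
import re
from datetime import datetime

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact_emails(text: str) -> str:
    """Replace email addresses with a placeholder (other PII needs more rules)."""
    return EMAIL.sub("[EMAIL]", text)


def standardize_date(value: str) -> str:
    """Convert a few known date layouts to ISO 8601 (YYYY-MM-DD)."""
    for layout in ("%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y"):
        try:
            return datetime.strptime(value, layout).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def clean(records: list[dict]) -> list[dict]:
    """Normalize whitespace, redact emails, fix dates, and drop duplicates."""
    seen = set()
    cleaned = []
    for record in records:
        text = redact_emails(" ".join(record["text"].split()))
        row = {"text": text, "date": standardize_date(record["date"])}
        key = (row["text"].lower(), row["date"])  # case-insensitive duplicate check
        if key not in seen:
            seen.add(key)
            cleaned.append(row)
    return cleaned


raw = [
    {"text": "Contact  jane@example.com for access", "date": "03/02/2024"},
    {"text": "contact jane@example.com for access", "date": "2024-02-03"},
    {"text": "Office closed", "date": "March 1, 2024"},
]
rows = clean(raw)
```

The second record is dropped because, once whitespace, case, and date format are normalized, it is identical to the first.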

The Impact of Data Quality

The quality of data directly affects the performance of the AI assistant in several ways:

- Accuracy: Clean, well-labeled data leads to more accurate responses

- Relevance: Properly categorized data helps the assistant find the most relevant information

- Bias Reduction: Careful data preparation helps minimize biases in the assistant's responses

- Efficiency: Well-structured data enables faster retrieval and processing

Building Data Pipelines: Automating the Workflow

Streamlining data collection, cleaning, and processing for AI systems

To streamline the process, we build data pipelines that automate the collection, cleaning, and processing of data. Tools like Apache Airflow and Pandas help us create efficient workflows for managing the data that powers our AI assistant.

1. Data Collection

Gather data from various sources, including:

- Internal documents and knowledge bases

- Customer support tickets and FAQs

- Product documentation and specifications

- Employee feedback and questions

2. Data Cleaning and Preprocessing

Prepare the data for use in the AI system:

- Remove duplicates and irrelevant information

- Standardize formats and correct errors

- Extract key entities and relationships

- Convert data into a consistent format

3. Data Storage

Store the processed data in a structured format for easy retrieval:

- Vector databases for semantic search

- Document databases for structured content

- Knowledge graphs for complex relationships

- Metadata indexes for efficient filtering
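The three stages can be chained as plain generator functions before reaching for an orchestration tool. The sketch below is a minimal, in-memory version with hypothetical source names and a dictionary standing in for the storage layer; it is not an Airflow or Pandas pipeline.

```python
from collections import defaultdict
from typing import Iterator


def collect(sources: dict[str, list[str]]) -> Iterator[dict]:
    """Stage 1: flatten every source into records tagged with their origin."""
    for source, texts in sources.items():
        for text in texts:
            yield {"source": source, "text": text}


def preprocess(records: Iterator[dict]) -> Iterator[dict]:
    """Stage 2: normalize whitespace and skip empty or repeated texts."""
    seen = set()
    for record in records:
        text = " ".join(record["text"].split())
        if text and text.lower() not in seen:
            seen.add(text.lower())
            yield {**record, "text": text}


def store(records: Iterator[dict]) -> dict[str, list[str]]:
    """Stage 3: build a metadata index that maps each source to its texts."""
    index = defaultdict(list)
    for record in records:
        index[record["source"]].append(record["text"])
    return dict(index)


sources = {
    "faq": ["How do I reset my password?", "how do I  reset my password?"],
    "tickets": ["Printer on floor 2 is offline", "   "],
}
index = store(preprocess(collect(sources)))
```

Each stage consumes the previous stage's output lazily, so the same functions could later be wrapped as individual tasks in a scheduler without changing their logic.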

Key Tools for Data Pipelines

Apache Airflow

A platform to programmatically author, schedule, and monitor workflows. It allows you to define complex data pipelines as code and visualize their execution.

Pandas

A Python library for data manipulation and analysis. It provides data structures and functions needed to manipulate structured data efficiently.

Apache Spark

A unified analytics engine for large-scale data processing. It can handle massive datasets across distributed clusters.

Elasticsearch

A distributed, RESTful search and analytics engine capable of addressing a growing number of use cases. It can be used to store and retrieve documents efficiently.

Putting It All Together: The AI Assistant

Combining all components to create a powerful knowledge assistant

After integrating all the components, the AI assistant is ready to help employees find information quickly and efficiently. Let's see how the different technologies work together to create a powerful knowledge assistant.

RAG: Combines retrieval and generation for accurate, human-like answers. The assistant retrieves relevant information from the knowledge base and uses it to generate coherent, contextual responses.

Agentic AI: Enables autonomous task execution and goal-oriented behavior. The assistant can take initiative, plan multi-step actions, and adapt to changing requirements.

Reasoning models: Provide logical, step-by-step answers. The assistant can break down complex problems, infer relationships between different pieces of information, and explain its reasoning.

AI SDKs and frameworks: Power the assistant's functionality and user interface. Tools like LangChain, Vercel AI SDK, and Claude SDK provide the building blocks for creating a seamless user experience.

Benefits for Employees

- Time Savings: Employees can find information in seconds instead of minutes or hours

- Improved Accuracy: The assistant provides reliable, fact-based answers

- Contextual Understanding: The assistant understands the context of questions and provides relevant responses

- 24/7 Availability: The assistant is always available to help, regardless of time or location

- Continuous Learning: The assistant improves over time as it learns from interactions and new information

The Future: Expanding the Assistant's Capabilities

Looking ahead to the next generation of AI assistants

The journey doesn't end here. As AI technology continues to evolve, we can expand the assistant's capabilities in several exciting directions.

Processing text, images, and audio to provide more comprehensive assistance. The assistant could analyze diagrams, screenshots, and other visual content to better understand and respond to queries.

Handling more complex queries and decision-making tasks. The assistant could help with strategic planning, complex problem-solving, and sophisticated data analysis.

Updating the assistant's knowledge base in real-time. The assistant could automatically incorporate new information, learn from user interactions, and adapt to changing business needs.

Key Takeaways

Essential concepts and technologies for building AI-powered knowledge assistants

- Transformers are the backbone of modern AI systems.

These neural networks are designed to handle sequential data and capture long-range dependencies, making them ideal for language processing tasks.

- RAG Architecture combines retrieval and generation for accurate answers.

By retrieving relevant information from a knowledge base and using it to generate responses, RAG provides both accuracy and fluency.

- Agentic AI enables autonomous and goal-oriented behavior.

AI systems that can plan, execute tasks, and adapt to new information provide more sophisticated assistance.

- MCP standardizes how applications provide context to LLMs, enabling seamless integration with data sources and tools.

This open protocol makes it easier to connect AI models to different data sources and tools, similar to how USB-C standardizes device connections.

- MCP Server provides tools, resources, and capabilities to clients.

The server-side implementation of MCP exposes tools, manages resources, and handles prompt requests.

- MCP Client manages connections and interactions with MCP servers.

The client-side implementation handles protocol negotiation, tool discovery, and resource access.

- Spring-AI MCP Server simplifies the development of MCP servers in Spring Boot applications.

This integration provides auto-configuration and both synchronous and asynchronous APIs for Java-based environments.

- Reasoning Models add logic and decision-making capabilities.

These models enable the AI to perform logical reasoning, problem-solving, and provide step-by-step explanations.

- AI SDKs like LangChain, LlamaIndex, Claude SDK, and Vertex AI provide the tools to build and deploy AI systems.

These libraries and frameworks simplify the development of AI applications by providing pre-built components and abstractions.

- Data Labeling, Cleaning, and Pipelines ensure high-quality data for training and operation.

The quality of the AI assistant depends on the quality of the data it's trained on and has access to.